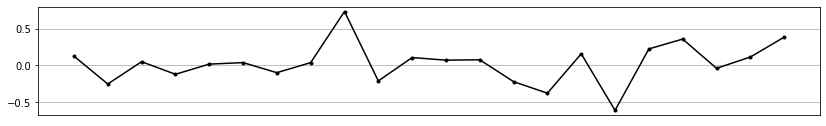

In 2017, the Economist published a headline whose message has become famous among data aficionados: data is the new oil. It referred to the many new opportunities businesses and organisations can seize when they make good use of the data their customers or partners generate every day. In many cases, we have tried and tested statistical and mathematical methods that have been in use for decades. If data is neatly stored in a relational database and takes the form of numbers and the occasional categorical variable, we can use a multitude of statistical methods to analyse it and gain insight from it.

However, the real world is always messier than we would like it to be, and this applies to data as well: Forbes magazine estimates that 90% of the data generated every day is unstructured, that is, text and images, videos and sounds; in short, all data that we cannot utilise using standard methods. Natural Language Processing (NLP) is a way of gaining insight into one category of unstructured data. The term describes all methods and algorithms that deal with processing, analysing and interpreting data in the form of (natural) language.

One key field of NLP is Sentiment Analysis, which analyses a text for the sentiment it contains or the emotions it evokes. Sentiment Analysis can be used to classify a text according to some metric such as polarity (is the text negative or positive?) or subjectivity (is the text written subjectively or objectively?). While its applications have traditionally focussed on social media, for instance classifying a brand’s output on social media or analysing the public’s reaction to its web presence, they are certainly not limited to the digital sphere. An NGO communicating with its supporters through channels such as direct mailing could, for example, use Sentiment Analysis to examine its mailings and investigate the responses they elicit.
A frequently employed yet quite simple way of undertaking Sentiment Analysis is to use a so-called Sentiment Dictionary. This is simply a dictionary in which each word receives a score according to some metric related to sentiment. For instance, to score a text for its polarity, one would use a dictionary that assigns each word a continuous score in some symmetric interval around zero, where the ends of the interval correspond to strictly negative or strictly positive words, and more neutral words cluster around the midpoint. Sentiment Dictionaries vary widely depending on context and, most importantly, language: while there is some choice for English, the selection diminishes for other languages. A collection of dictionaries in the author’s native German, for example, can be found here.

As a brief example of Sentiment Analysis in action, consider the following diagram, showing a combined polarity score for some direct mailing activities of an NGO in a German-speaking country over one year, whereby each mailing received a score between -1 (very negative) and +1 (very positive). This scoring was undertaken using the SentiWS dictionary of the University of Leipzig.

A very different approach relies on the use of word embedding. Word embedding is a technique whereby the individual words in a text are represented as high-dimensional vectors, ideally in such a way that certain operations or metrics in the vector space correspond to some relation on the semantic level. This means, for example, that the embedding places words that occur in similar circumstances or carry similar meaning or sentiment close together in the vector space, that is to say, their distance measured by some metric is small. Another illustrative example is the following: consider an embedding ϕ and the words “king”, “woman” and “queen”; then ideally, ϕ(“king”) + ϕ(“woman”) ≈ ϕ(“queen”).
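To make this kind of vector arithmetic concrete, here is a minimal sketch in Python. The three-dimensional vectors are hand-picked purely for illustration (an assumption, not the output of any trained model), and `toy_embedding`, `cosine_similarity` and `nearest_word` are hypothetical names introduced only for this example.

```python
import math

# Hand-picked toy vectors, NOT trained embeddings (assumption for illustration).
# Loosely: first axis ~ "royalty", second ~ "female", third ~ "person".
toy_embedding = {
    "king":  [0.9, 0.1, 0.5],
    "queen": [0.9, 0.9, 0.6],
    "man":   [0.1, 0.1, 0.5],
    "woman": [0.1, 0.9, 0.5],
}

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def nearest_word(query, exclude=()):
    """Return the vocabulary word whose vector is most similar to `query`."""
    candidates = [w for w in toy_embedding if w not in exclude]
    return max(candidates, key=lambda w: cosine_similarity(toy_embedding[w], query))

# phi("king") + phi("woman"), computed component by component.
combined = [k + w for k, w in zip(toy_embedding["king"], toy_embedding["woman"])]

# Exclude the input words themselves, as is common for such analogy queries.
result = nearest_word(combined, exclude=("king", "woman"))
print(result)  # queen
```

With real embeddings the match is only approximate, which is why the result is looked up as the nearest neighbour of the combined vector rather than tested for equality.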
Put into words, the relation between king, woman and queen says that the sum of the vectors for king and woman should be roughly the same as the vector for queen, who is of course in some ways a “female king”. The semantic relation thus corresponds to an arithmetic relation in the vector space. (The form of this analogy most often cited also subtracts the vector for “man”, giving ϕ(“king”) − ϕ(“man”) + ϕ(“woman”) ≈ ϕ(“queen”).) To better visualise these relationships on the vector level, consider the following illustrations, taken from Google's Machine Learning crash course. They show the vector representations of some words in different semantic contexts, represented for better readability in a three-dimensional space (actual embeddings usually map into much higher-dimensional vector spaces, upwards of a few hundred dimensions).

Example visualisation of word embeddings in the three-dimensional space

There are multiple ways to construct such an embedding, i.e. to obtain an optimal vector representation for every word in the text corpus, most of which rely on neural nets. Due to the huge computational cost involved, many users opt for transfer learning. This means taking a pre-trained model and finishing its training on the data set at hand (in an NLP setting, the text corpus), which is both more efficient and produces more accurate results than training a model architecture “from scratch”. Python users might find this resource, which provides a huge number of pre-trained models and frameworks for continued training, very interesting.

Word embedding is principally a way to take unstructured text data and transform it into a structured form: vectors on which computers can perform operations. One can then apply different methods to this vectorised representation to gain insight from the written text. For instance, when performing Sentiment Analysis, one can use a Machine Learning model of choice, typically a neural net, to classify the text according to some metric of sentiment, say polarity, using the vector representation of the text corpus.
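The step from word vectors to a polarity label can be sketched as follows. This is a deliberately simplified illustration under stated assumptions: the two-dimensional word vectors are invented by hand rather than learned, each text is represented by the average of its word vectors, and a nearest-centroid rule stands in for the neural net mentioned above. All names (`word_vectors`, `document_vector`, `classify`) and the example texts are hypothetical.

```python
import math

# Hypothetical 2-dimensional word vectors (assumption); real embeddings are
# learned from data and have hundreds of dimensions.
word_vectors = {
    "great": [0.9, 0.1], "happy": [0.8, 0.2], "thanks": [0.7, 0.3],
    "terrible": [-0.9, 0.1], "sad": [-0.8, 0.2], "crisis": [-0.7, 0.4],
    "donation": [0.1, 0.9], "letter": [0.0, 0.8],
}

def document_vector(text):
    """Represent a text as the average of its known word vectors."""
    vectors = [word_vectors[w] for w in text.lower().split() if w in word_vectors]
    if not vectors:
        raise ValueError("text contains no known words")
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(len(vectors[0]))]

def centroid(texts):
    """Average the document vectors of several texts."""
    vectors = [document_vector(t) for t in texts]
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(len(vectors[0]))]

# Tiny hand-labelled training set (invented for illustration).
labelled = {
    "positive": ["great donation thanks", "happy letter"],
    "negative": ["terrible crisis", "sad letter"],
}
centroids = {label: centroid(texts) for label, texts in labelled.items()}

def classify(text):
    """Assign the label whose centroid is closest in Euclidean distance."""
    doc = document_vector(text)
    return min(centroids, key=lambda label: math.dist(doc, centroids[label]))

print(classify("thanks great letter"))   # positive
print(classify("sad crisis donation"))   # negative
```

In practice, the hand-made vectors would be replaced by a pre-trained embedding and the centroid rule by a trained classifier; the overall shape of the pipeline (text, then vectors, then a label) stays the same.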
The field of NLP has in recent years become one of the fastest moving and most researched fields of Data Science. The methods we can employ today have evolved and improved tremendously compared to just a short time ago, and are bound to do so again in the near future. For a data nerd, this is interesting in and of itself; but different actors, especially in the not-for-profit sector, are well advised to keep a close eye on these developments and consider in which ways they can help them in their work.
